Installing the GATK Spark environment to speed up GATK

1. Install Scala and set the environment variable:

curl -fL https://github.com/coursier/coursier/releases/latest/download/cs-x86_64-pc-linux.gz | gzip -d > cs && chmod +x cs && ./cs setup --install-dir /share/biosoft/scala/bin

export PATH=/share/biosoft/scala/bin:$PATH
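The `export` above only lasts for the current shell session. A minimal sketch for making it permanent follows. The helper name `persist_path_dir` and the default startup file `~/.bashrc` are assumptions for illustration, not part of the original setup.

```shell
# Append an "export PATH=..." line to a shell startup file, but only once.
# Usage: persist_path_dir DIR [RCFILE]
# RCFILE defaults to ~/.bashrc (an assumption; use the file your shell reads).
persist_path_dir() {
    dir=$1
    rcfile=${2:-"$HOME/.bashrc"}
    line="export PATH=$dir:\$PATH"
    # -x matches the whole line, -F treats the pattern as a fixed string
    if ! grep -qxF "$line" "$rcfile" 2>/dev/null; then
        printf '%s\n' "$line" >> "$rcfile"
    fi
}
```

For example, `persist_path_dir /share/biosoft/scala/bin` adds the line on the first call and does nothing on later calls.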

2. Install Spark and set the environment variables:

wget https://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-4.0.1/spark-4.0.1-bin-hadoop3.tgz

tar zxvf spark-4.0.1-bin-hadoop3.tgz

export SPARK_HOME=/share/biosoft/spark/spark-4.0.1-bin-hadoop3

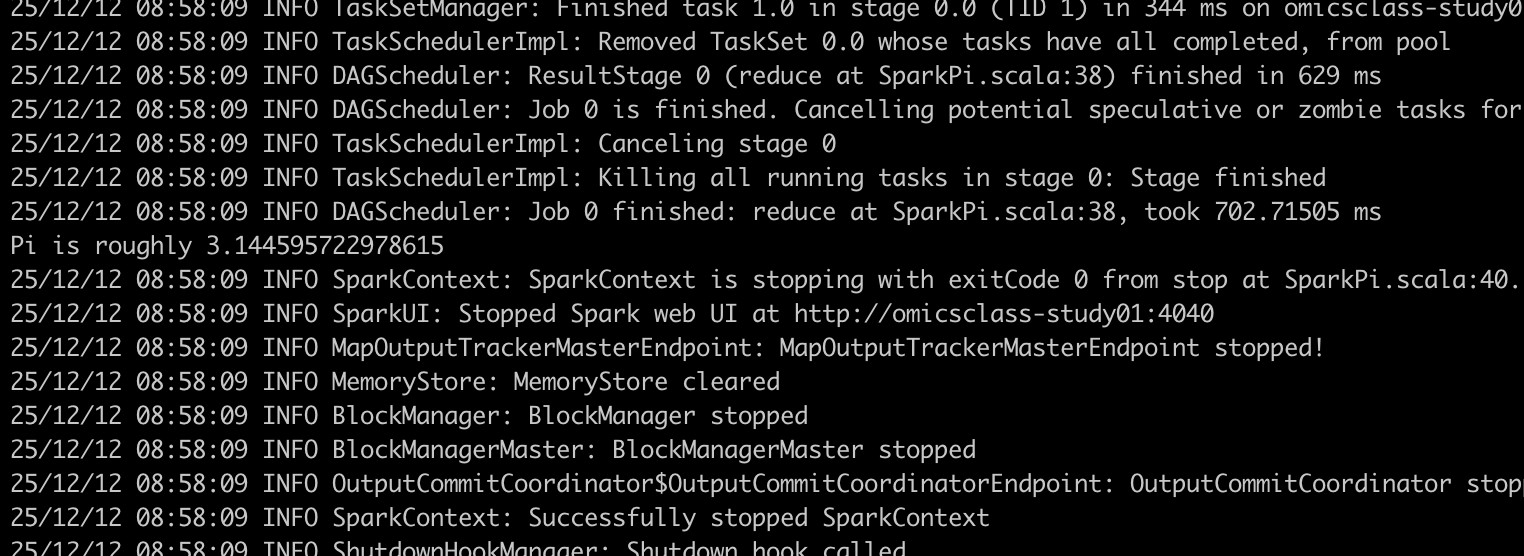

export PATH=$PATH:$SPARK_HOME/bin

run-example SparkPi # test the installation with the Pi calculation
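Before running the Pi example, it can help to confirm that `SPARK_HOME` points at a complete unpacked distribution. The sketch below only checks that the two launchers used in this post exist and are executable. The function name `check_spark_home` is hypothetical.

```shell
# Verify that the Spark launchers used in this guide exist under SPARK_HOME.
# Usage: check_spark_home [DIR]   (DIR defaults to $SPARK_HOME)
check_spark_home() {
    home=${1:-$SPARK_HOME}
    for tool in spark-submit run-example; do
        if [ ! -x "$home/bin/$tool" ]; then
            echo "missing or not executable: $home/bin/$tool" >&2
            return 1
        fi
    done
    echo "Spark layout looks OK under $home"
}
```

If the check fails, the usual cause is that the archive was unpacked in a different directory from the one assigned to `SPARK_HOME`.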

3. Set up the Java environment for Spark. Do not use a Java version that is too new: version 23 raises errors.

cp conf/spark-env.sh.template conf/spark-env.sh # then add the lines below to conf/spark-env.sh

export SCALA_HOME=/share/biosoft/scala/bin/

export JAVA_HOME=/share/biosoft/java/jdk-17.0.9/

export SPARK_HOME=/share/biosoft/spark/spark-4.0.1-bin-hadoop3
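Since the Java version matters here, a small guard can catch a wrong `JAVA_HOME` before Spark starts. This is a sketch under stated assumptions: the function names are hypothetical, and only Java 17 is treated as known-good because that is the version used in this post. Other versions may work but are not covered here.

```shell
# Extract the major version from strings like "17.0.9", "23" or legacy "1.8.0_392".
java_major() {
    v=$1
    case $v in
        1.*) v=${v#1.} ;;   # legacy scheme: 1.8.0 means Java 8
    esac
    # Cut everything from the first ".", "+" or "-" onwards
    echo "${v%%[.+-]*}"
}

# Read the version string reported by $JAVA_HOME/bin/java (printed on stderr).
detect_java_version() {
    "${JAVA_HOME:?JAVA_HOME is not set}/bin/java" -version 2>&1 |
        sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed only for the Java major version used in this guide (17).
check_java_for_spark() {
    major=$(java_major "$1")
    if [ "$major" = "17" ]; then
        echo "Java $major: matches the version used in this guide"
    else
        echo "Java $major: untested here (17 was used; 23 is reported to fail)" >&2
        return 1
    fi
}
```

A typical call would be `check_java_for_spark "$(detect_java_version)"` after sourcing `conf/spark-env.sh`.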

4. Run the Spark version of GATK:

gatk --java-options '-Xmx100g' HaplotypeCallerSpark --spark-master 'local[20]' \
-R Arabidopsis_thaliana.TAIR10.dna.chromosome.4.fa \
-I p1.sorted.dedup.bam \
-O p1.g.vcf.gz --max-alternate-alleles 4 --sample-ploidy 2 -ERC GVCF --tmp-dir tmp
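The run above depends on a few things that are easy to miss: the number inside `local[...]` is the number of local Spark worker threads, and GATK expects the reference FASTA to have a `.fai` index and a `.dict` sequence dictionary next to it. The following pre-flight sketch covers both. The function names are hypothetical, and the dictionary path assumes the usual convention of replacing the FASTA extension with `.dict`.

```shell
# Build a local Spark master string such as local[20] from a thread count.
spark_master_local() {
    cores=$1
    case $cores in
        ''|*[!0-9]*) echo "thread count must be a whole number" >&2; return 1 ;;
    esac
    printf 'local[%s]\n' "$cores"
}

# Fail early if the reference lacks its index or sequence dictionary.
preflight_reference() {
    ref=$1
    dict=${ref%.*}.dict   # assumed naming: ref.fa -> ref.dict
    if [ ! -f "$ref" ]; then
        echo "missing reference: $ref" >&2; return 1
    fi
    if [ ! -f "$ref.fai" ]; then
        echo "missing $ref.fai (create with: samtools faidx $ref)" >&2; return 1
    fi
    if [ ! -f "$dict" ]; then
        echo "missing $dict (create with: gatk CreateSequenceDictionary -R $ref)" >&2; return 1
    fi
}
```

With these in place, the master value can be passed as `--spark-master "$(spark_master_local 20)"`. Running `mkdir -p tmp` beforehand ensures the directory given to `--tmp-dir` exists.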

- Published 2025-12-11 15:22

- Category: Resequencing
